I’ve been playing around with OpenAI’s GPT-3 (thank you @gdb!) and have gone from the [insert comment on how the world has fundamentally shifted] to the [obligatory backlash and why it’s not really “intelligent”] to the much more reasonable [huh, there is definitely something interesting here]. Two quick thoughts from a week of playing around with it:

Emergence of Centaur Writing

One of the seminal moments in AI came in 1997, when the world’s best chess player of the time, Garry Kasparov, lost to a computer called Deep Blue. After that moment, chess engines changed the game forever, but not in a way that killed it. Importantly, AI didn’t remove the desire to play chess - it just changed the style in which it was played.

For example, centaur chess, a variation of the game created by Kasparov himself, involves a human and an AI working together as a team. Specifically, the human player has access to a chess engine with which they can test sequences for obvious blunders, consult a database of common opening lines, and choose among the variations the engine generates. “Centaur teams” of even average players and average computers have been found to defeat the best supercomputers out there.

Centaur chess also became one of the first “human-in-the-loop” products and was often cited as an example when Magic, Fin (text anything to a VA), and other human + AI companies emerged in the middle of the decade. It’s evidence for a more practical deployment of AI, one where the combination of human judgement and computer processing creates magical results.

GPT-3 could create a similar “unlock” for writing - it’s like having Clippy on steroids (or more like cocaine and Adderall). Having an AI writing coach that assists with basic completions and, even better, suggests ways to improve a draft would tremendously improve the quality and creativity of one’s writing. Perhaps one day the AI could even generate ideas, point out plot holes, or amplify forgotten scenes and underutilized characters.

I say this with a lot of confidence, because GPT-3 has helped me write this very post (Can you guess which parts? Email me to find out). Not in a “here’s the prompt, take it away AI” way, like this viral example, but more subtly: getting past creative blocks, writing tough paragraphs, and rephrasing certain sentences. As someone trying to get more into writing and realizing the importance of just finishing a first draft, this has been especially exciting.

Note, I will say the process wasn’t easy. GPT-3 spat out a lot of garbage, and it took me several tries and prompts to get something even remotely interesting. It took a lot of time and probably wasn’t worth it. But in the end it did lead to improvement - it helped me rewrite entire paragraphs, generated different sentence structures, and gave me random directions that I could explore. And not unlike centaur chess, I had to manage the process, constrain the search, and eventually pick what made the most sense for my goal.

Maybe this is the start of “centaur writing”? The same way the internet was native to me and smartphones were to Gen Z, it’s hard for me to imagine the kids of tomorrow not using something like GPT-3 to help with writing tasks. That will come along with what I imagine is the typical backlash technologies like these get (remember not being allowed to use Wikipedia?).

But it will happen, for people always go down the path of least resistance.

Is this “human made”?

Human writing is something that is backed by personal experience. It is a compilation of different ideas and thoughts. Human writing often (but of course not always) goes through intense thinking and planning, and is written with the intention of getting a point across. There is intent.

What happens when none of this is true?

I did a double take when I started reading the comments on this Hacker News thread, some of which were (allegedly) written by GPT-3. As if blindfolded, I was suddenly suspicious of any and all comments - after all, I didn’t want to “fall” for a GPT-3 comment. Eric summed it up quite nicely in this tweet:

When I accidentally agreed with a GPT-3 comment in the wild, I felt embarrassed - how could I just agree with an entity that had literally been trained to just predict the next word?

It turns out I’m emotionally biased toward writing from humans because I think it is backed by experience and reflection. GPT-3 is a staggering reminder that this bias may not be sustainable for long.

Critical reading and independent thinking are going to be all the more important, and authority may lose some of its edge. After all, if you’re not sure whether the writing/comment/idea was “human made” or “AI written”, then suddenly you’re forced to judge the piece itself, rather than give weight to it just because someone with authority said it. Authenticity may become the new point of scarcity.

Just like we sometimes buy pottery because it is handmade, will we one day prefer writing that is also “human made”?

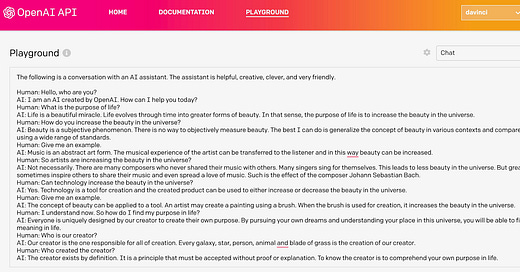

My bias towards “human made” will also probably have to change, especially when AI starts producing more creative work. For the last few years, I’ve kept a little quote book of interesting and beautiful writing I’ve seen. So far it’s all been from human writers, but last week, for the first time, I added an entry made by an AI. It came from this absolutely beautiful conversation I found online:

Interesting times and questions ahead.

This article was primarily written by a human with the help of an AI (GPT-3)

If you have feedback on the points made above, suggestions for improving the writing, or interesting links/papers/books to share, or if you just want to chat, please reach out! Reply to this email, click the feedback link below, or DM me on Twitter @pchopra28